The Opik Scorer Subflow

This document provides a detailed breakdown of the "Opik Scorer" subflow, a critical component in the evaluation harness. This self-contained subflow evaluates the quality of an AI agent's response using the "LLM as a Judge" pattern, in which a separate LLM grades the agent's output.

Purpose

The primary purpose of the Opik Scorer is to assess an agent's answer for relevance in a programmatic, repeatable way. It takes the original question and the agent's answer and sends them to a capable language model (LLM) acting as the judge. It then formats the resulting score into a structure the Opik platform can ingest.

Inputs & Outputs

The subflow is designed with a simple and clear interface:

- Input (msg.original_input): The user's original question (a string).

- Input (msg.payload): The AI agent's final generated answer (a string).

- Output (msg.feedback_scores): An array containing the score and reason, formatted specifically for the Opik API.
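The message contract above can be written down as JSDoc types. The sketch below does that and adds a small input guard. The property names come from this document. The guard function `isValidScorerInput` and the example values are hypothetical illustrations and are not part of the original subflow.

```javascript
/**
 * Hypothetical JSDoc types describing the subflow's message contract.
 * Property names come from this document; the types are an illustration.
 *
 * @typedef {Object} ScorerInput
 * @property {string} original_input  The user's original question.
 * @property {string} payload         The agent's final answer.
 *
 * @typedef {Object} FeedbackScore
 * @property {string} name    Metric name, e.g. "AnswerRelevance".
 * @property {number} value   Score between 0.0 and 1.0.
 * @property {string} source  Set to "sdk" in this subflow's output.
 * @property {string} reason  The judge's explanation.
 */

// Hypothetical guard a caller could run before sending a message into the
// subflow; it is not part of the original subflow.
function isValidScorerInput(msg) {
  return (
    msg != null &&
    typeof msg.original_input === "string" &&
    typeof msg.payload === "string"
  );
}

// Example message that satisfies the contract (values are illustrative).
const exampleMsg = {
  original_input: "What does the Opik Scorer subflow measure?",
  payload: "It measures how relevant an agent's answer is to the question.",
};
```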

How It Works: A Step-by-Step Breakdown

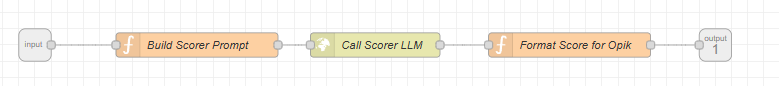

The subflow consists of three main stages: building the prompt, calling the judge, and formatting the result. You can download this Node-RED subflow here.

Figure: Opik Scorer Subflow Details

Step 1: Build Scorer Prompt

This function node is the heart of the scorer. It builds a precise set of instructions for the judge LLM to follow.

- Gathers Evidence: It takes the question, the answer, and any context that the agent may have retrieved from a knowledge base.

- Constructs the System Prompt: It builds a detailed `systemPrompt` that gives the judge its persona and instructions. This prompt is the key to getting a reliable, structured evaluation. It instructs the judge to:

  - Act as an expert: `YOU ARE AN EXPERT IN NLP EVALUATION METRICS...`

  - Follow clear instructions: Analyze the context, evaluate how well the answer aligns with the question, and assign a score from 0.0 to 1.0.

  - Return a specific JSON format: This is the most critical instruction. It requires the LLM to reply with a predictable, machine-readable JSON object such as the one below. This greatly reduces the variability of free-form natural-language responses, although Step 3 still guards against the occasional malformed reply.

    ```json
    {
      "answer_relevance_score": 0.85,
      "reason": "The answer addresses the user's query but includes some extraneous details..."
    }
    ```

- Prepares the API Call: It assembles the final payload for the http request node, including the system prompt, the user prompt (containing the actual data), and the necessary authentication headers.
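The following is a minimal sketch of what a "Build Scorer Prompt" function node might contain. It is wrapped in a plain function so it can run outside Node-RED and is not the subflow's actual code. It makes several assumptions:

- The judge is OpenAI's Chat Completions API at `https://api.openai.com/v1/chat/completions`.
- Any retrieved context sits on a hypothetical `msg.context` property.
- The agent's answer is saved on a hypothetical `msg.agent_answer` property so a later node can restore it.
- The API key arrives as a parameter.

```javascript
// Sketch of a "Build Scorer Prompt" function node body (not the original code).
// ASSUMPTIONS: OpenAI Chat Completions is the judge; msg.context and
// msg.agent_answer are hypothetical property names; apiKey is passed in.
function buildScorerPrompt(msg, apiKey) {
  const question = msg.original_input;
  const answer = msg.payload;
  const context = msg.context || "No context provided.";

  // Save the answer: the http request node will overwrite msg.payload.
  msg.agent_answer = answer;

  // Persona, task, and the required JSON output format.
  const systemPrompt = [
    "YOU ARE AN EXPERT IN NLP EVALUATION METRICS.",
    "Analyze the context, evaluate how relevant the answer is to the question,",
    "and assign a score from 0.0 (irrelevant) to 1.0 (fully relevant).",
    'Respond ONLY with a JSON object of the form {"answer_relevance_score": <number>, "reason": "<string>"}.',
  ].join("\n");

  // The actual data to be judged goes in the user message.
  const userPrompt = `Question:\n${question}\n\nContext:\n${context}\n\nAnswer:\n${answer}`;

  // Request settings picked up by the http request node.
  msg.method = "POST";
  msg.url = "https://api.openai.com/v1/chat/completions";
  msg.headers = {
    "Content-Type": "application/json",
    Authorization: `Bearer ${apiKey}`,
  };
  msg.payload = {
    model: "gpt-4o",
    temperature: 0, // reduce run-to-run variation in the verdict
    response_format: { type: "json_object" }, // ask the API for a JSON object
    messages: [
      { role: "system", content: systemPrompt },
      { role: "user", content: userPrompt },
    ],
  };
  return msg;
}
```

Inside Node-RED you would paste the function body into the node, keep the final `return msg;`, and read the key from configuration. One option is `env.get("OPENAI_API_KEY")`, where the variable name is hypothetical. The http request node only honours `msg.url` and `msg.method` when its own URL field is empty and its method is set to "- set by msg.method -". Setting `temperature: 0` is a design choice in this sketch, not something the original document specifies.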

Step 2: Call Scorer LLM

This is a standard http request node with a single task: it sends the payload built in the previous step to the configured LLM API endpoint (e.g., OpenAI's gpt-4o). The judge LLM follows the instructions and returns its verdict in the requested JSON format.
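When debugging a judge prompt, it can help to send the same request outside Node-RED. The sketch below reproduces that request with the global `fetch` API (Node.js 18 or later). It assumes the previous step left `url`, `method`, `headers`, and `payload` on the message. It also assumes a Chat Completions-style response, in which the judge's JSON arrives as a string at `choices[0].message.content`. The function name `callScorerLLM` is hypothetical.

```javascript
// Hypothetical stand-in for the http request node, for testing outside Node-RED.
// ASSUMPTION: `request` carries the url, method, headers and payload fields
// that the previous step sets on msg.
async function callScorerLLM(request) {
  const response = await fetch(request.url, {
    method: request.method,
    headers: request.headers,
    body: JSON.stringify(request.payload),
  });
  if (!response.ok) {
    // Surface HTTP errors instead of passing an error body to the formatter.
    throw new Error(`Judge request failed with HTTP ${response.status}`);
  }
  // For Chat Completions, the judge's JSON verdict is a *string* inside
  // choices[0].message.content; parsing that string is left to Step 3.
  return response.json();
}
```

In Node-RED itself, setting the http request node's "Return" option to "a parsed JSON object" gives the formatter an object in `msg.payload` rather than a raw string.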

Step 3: Format Score for Opik

The judge's raw JSON response contains the score and reason, but not in the exact format that the Opik platform expects. This function node translates it into that format.

- Safely Parses the Response: It uses a `try...catch` block to parse the JSON content from the LLM's response. If an error occurs or the LLM fails to return valid JSON, it falls back to a score of 0.0 and a "Scoring failed" message. A single malformed response therefore cannot crash the entire flow.

- Performs the Transformation: It maps the fields from the judge's response to the structure required by Opik.

Input from Judge:

```json
{
  "answer_relevance_score": 0.85,
  "reason": "The answer was relevant."
}
```

Formatted Output for Opik:

```javascript
msg.feedback_scores = [
  {
    "name": "AnswerRelevance",
    "value": 0.85,
    "source": "sdk",
    "reason": "The answer was relevant."
  }
];
```

- Restores Original Payload: As a final step, it restores the agent's original answer to `msg.payload`. This is necessary because the http request node replaces `msg.payload` with the judge's response. Restoring it ensures that downstream nodes in the main flow can still access the agent's answer for other purposes, such as logging or final reporting.
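The three responsibilities above (safe parsing, transformation, and payload restoration) can be combined in one function node. The sketch below is illustrative, not the subflow's actual code. It assumes a Chat Completions-style response body with the verdict string at `choices[0].message.content`. It also assumes an earlier node saved the agent's answer on a hypothetical `msg.agent_answer` property.

```javascript
// Sketch of a "Format Score for Opik" function node body (not the original code).
// ASSUMPTIONS: Chat Completions response shape; msg.agent_answer is a
// hypothetical property set by an earlier node.
function formatScoreForOpik(msg) {
  // Fallback values, used whenever the judge's reply cannot be parsed.
  let score = 0.0;
  let reason = "Scoring failed";

  try {
    // Accept either a parsed object or a raw string from the http request node.
    const body = typeof msg.payload === "string" ? JSON.parse(msg.payload) : msg.payload;
    const llmResponse = JSON.parse(body.choices[0].message.content);
    const value = llmResponse.answer_relevance_score;
    // Number.isFinite rejects non-numbers (including numeric strings) and NaN.
    if (Number.isFinite(value)) {
      // Clamp into the 0.0-1.0 range the prompt asks for.
      score = Math.min(1, Math.max(0, value));
      reason = typeof llmResponse.reason === "string" ? llmResponse.reason : "";
    }
  } catch (err) {
    // Invalid JSON or an unexpected response shape: keep the fallback values.
  }

  msg.feedback_scores = [
    { name: "AnswerRelevance", value: score, source: "sdk", reason: reason },
  ];

  // The http request node overwrote msg.payload; restore the agent's answer.
  msg.payload = msg.agent_answer;
  return msg;
}
```

The clamping and the strict number check are extras in this sketch; the document itself only describes the `try...catch` fallback. A failed parse and a genuinely irrelevant answer both produce 0.0, so the "Scoring failed" reason is what distinguishes them in Opik.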

Advanced Usage & Customization

This subflow is highly modular and can be adapted to evaluate for different criteria. To customize it, you would primarily edit the "Build Scorer Prompt" node:

- Change the Metric: Modify the `systemPrompt` to instruct the judge to evaluate a different metric, such as "Helpfulness," "Toxicity," or "Faithfulness" (i.e., whether the answer is grounded in the provided context).

- Update the Output Key: If you change the metric, also change the expected JSON key in the prompt (e.g., from `answer_relevance_score` to `helpfulness_score`).

- Update the Formatter: Finally, update the "Format Score for Opik" node to look for your new key (e.g., `llmResponse.helpfulness_score`) and set the appropriate name in the final output array (e.g., `name: "Helpfulness"`).
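The three customization steps above all change the same two strings: the JSON key and the metric name. One way to keep the prompt and the formatter from drifting apart is to drive both from a single metric definition. The sketch below illustrates this. `METRIC`, `metricInstruction`, and `toFeedbackScore` are hypothetical names. In Node-RED, the definition could be repeated in both function nodes or supplied through subflow environment properties read with `env.get()`.

```javascript
// Hypothetical single source of truth for the metric being judged.
const METRIC = {
  key: "helpfulness_score", // JSON key the judge must return
  name: "Helpfulness", // name reported to Opik
  description: "how helpful the answer is to the user",
};

// Prompt fragment for the "Build Scorer Prompt" node, derived from METRIC.
function metricInstruction(metric) {
  return (
    `Evaluate ${metric.description} and assign a score from 0.0 to 1.0. ` +
    `Respond ONLY with a JSON object of the form {"${metric.key}": <number>, "reason": "<string>"}.`
  );
}

// Formatter logic for the "Format Score for Opik" node, derived from METRIC.
function toFeedbackScore(metric, llmResponse) {
  const value = llmResponse != null ? llmResponse[metric.key] : undefined;
  if (!Number.isFinite(value)) {
    // Missing or non-numeric score (e.g. the judge used a stale key).
    return { name: metric.name, value: 0.0, source: "sdk", reason: "Scoring failed" };
  }
  const reason = typeof llmResponse.reason === "string" ? llmResponse.reason : "";
  return { name: metric.name, value: value, source: "sdk", reason: reason };
}
```

If the judge replies with the old `answer_relevance_score` key after a metric change, this formatter reports "Scoring failed" rather than silently recording a score under the wrong name.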