Running an Evaluation in Opik using Node-RED

This tutorial explains how to use a pre-built Node-RED flow to run an evaluation in your Opik service. This powerful flow takes an existing dataset, runs it against a model or knowledge base, and generates a detailed evaluation report.

Prerequisites

Before you begin, ensure you have the following set up:

- An Existing Opik Service: You must have a running Opik service. You can create one by following the Opik Service in UBOS Tutorial.

- A Created Dataset: You need a dataset within your Opik service to evaluate. You can create one using the "Creating Dataset in Opik using Node-RED" flow.

- Required Credentials in Node-RED: The flow relies on several global variables being configured in your Node-RED environment. You must set:

  - opik_url: The full base URL of your Opik service.
  - opik_username: The username for your service.
  - opik_password: The password for your service.
  - openaiKey: Your API key for the language model used in the evaluation.
  - chroma_link: The connection path for your ChromaDB instance.

  For details on how to set these, refer to the "Configuring Global Environment Variables in Node-RED" guide.

- Node-RED Setup: This specific Node-RED evaluation flow must be imported and running. You can download this flow here. (Right-click and "Save link as..." to download)
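Before triggering a run, it can help to confirm that the global variables listed under Required Credentials are all set. The snippet below is a minimal sketch, not part of the shipped flow: `findMissingGlobals` and `fakeGlobal` are illustrative names, and the URL is a placeholder. It relies only on a `get(key)` method, which the `global` context object in a Node-RED Function node also provides.

```javascript
// Keys named in the prerequisites above.
const REQUIRED_KEYS = ["opik_url", "opik_username", "opik_password", "openaiKey", "chroma_link"];

// `store` is any object with a get(key) method. Inside a Function node,
// the built-in `global` context object can be passed here.
function findMissingGlobals(store) {
  // A key counts as missing if it is undefined, null, or an empty string.
  return REQUIRED_KEYS.filter((key) => {
    const value = store.get(key);
    return value === undefined || value === null || value === "";
  });
}

// Stand-in for the Node-RED global context, for illustration only.
const fakeGlobal = new Map([
  ["opik_url", "http://opik.example"],
  ["opik_username", "admin"],
  ["opik_password", "secret"],
  ["openaiKey", ""],
]);

const missingGlobals = findMissingGlobals(fakeGlobal);
console.log(missingGlobals); // [ 'openaiKey', 'chroma_link' ]
```

In a Function node, the same check could be written as `findMissingGlobals(global)` and used to stop the flow early with a clear message.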

Understanding the Workflow

This flow is designed to handle potentially long-running evaluation tasks. Although the main flow looks simple, it delegates the work to a subflow and keeps the caller's API connection open until that subflow finishes.

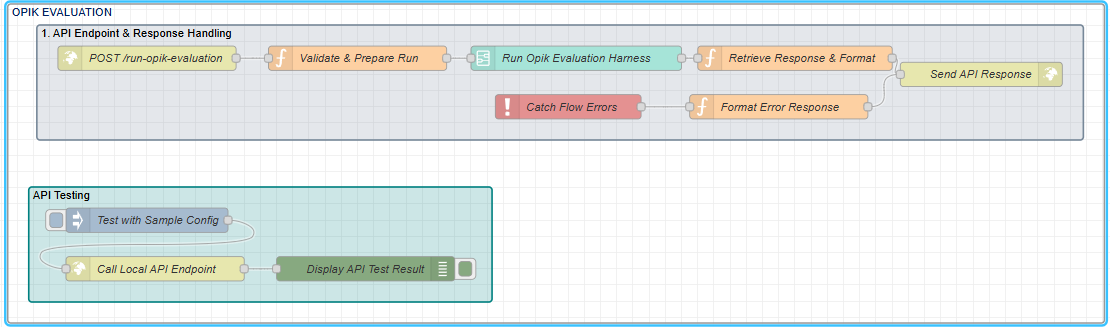

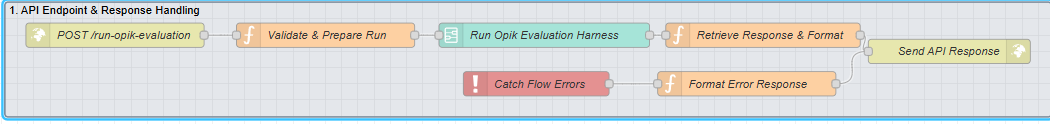

API Endpoint & Response Handling (Grey Group): This is the public-facing part of the flow. It validates the incoming request, initiates the evaluation, and holds the API connection open until the entire evaluation is complete before sending a final response. It also catches errors raised anywhere in the flow and reports them to the caller.

Core Evaluation Harness (Subflow - Run Opik Evaluation Harness): The Validate & Prepare Run node passes the request to a complex subflow (the "harness"). This is where the main work happens:

- Fetching the specified dataset from Opik.

- Looping through each item in the dataset.

- Running the evaluation logic (e.g., comparing a model's answer to the expected_output).

- Scoring the results.

- Generating a final, comprehensive report.

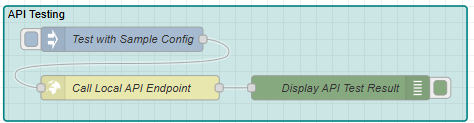

API Testing (Dark Green Group): A dedicated section with a manual Inject node that allows developers to trigger a test run directly from the Node-RED editor without needing an external tool.

Step-by-Step Guide to Running an Evaluation

Step 1: Trigger the Evaluation Flow

You can start the process in two ways: via an API call (for automated workflows) or a manual click (for testing).

Method A: Trigger via API Endpoint

Send a POST request to the evaluation endpoint. This is the standard way to integrate this process into other applications.

URL: http://<your-node-red-ip>:1880/run-opik-evaluation

Method: POST

Body (JSON): You must provide the name of the dataset to be evaluated and a name for the new experiment that will be created.

    {
      "opik_dataset_name": "documents-qa-dataset-1753701963438",
      "opik_experiment_name": "My First Evaluation Run"
    }
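The request described above can be assembled in a few lines of JavaScript. This is a sketch under assumptions: `buildEvaluationRequest` is an illustrative helper, and `node-red.example` is a placeholder for your own Node-RED host. The endpoint path and body field names are taken from this tutorial.

```javascript
// Builds the URL and options for a fetch() call to the evaluation endpoint.
function buildEvaluationRequest(nodeRedBaseUrl, datasetName, experimentName) {
  if (!datasetName || !experimentName) {
    throw new Error("Both a dataset name and an experiment name are required");
  }
  return {
    // Strip trailing slashes so the path is not doubled up.
    url: `${nodeRedBaseUrl.replace(/\/+$/, "")}/run-opik-evaluation`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        opik_dataset_name: datasetName,
        opik_experiment_name: experimentName,
      }),
    },
  };
}

const evaluationRequest = buildEvaluationRequest(
  "http://node-red.example:1880/", // placeholder host
  "documents-qa-dataset-1753701963438",
  "My First Evaluation Run"
);
console.log(evaluationRequest.url);

// To send it (Node.js 18+ ships a global fetch):
//   const response = await fetch(evaluationRequest.url, evaluationRequest.options);
//   console.log(response.status, await response.json());
```

Because the flow holds the connection open until the evaluation finishes, the client should allow for a long response time on this call.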

Method B: Manual Trigger via the Inject Node

For quick tests, use the built-in testing mechanism.

Find the dark green block labeled "API Testing".

Locate the Inject node named "Test with Sample Config".

Click the square button on the left side of this node to start the flow.

This will trigger the entire evaluation using the default dataset and experiment names defined in the node's payload.

Step 2: The Evaluation Process

Once triggered, the flow begins its work. While the main flow looks simple, it immediately passes control to the "Opik Evaluation Harness" subflow, where the core logic resides. Here’s a detailed breakdown of what happens inside that harness.

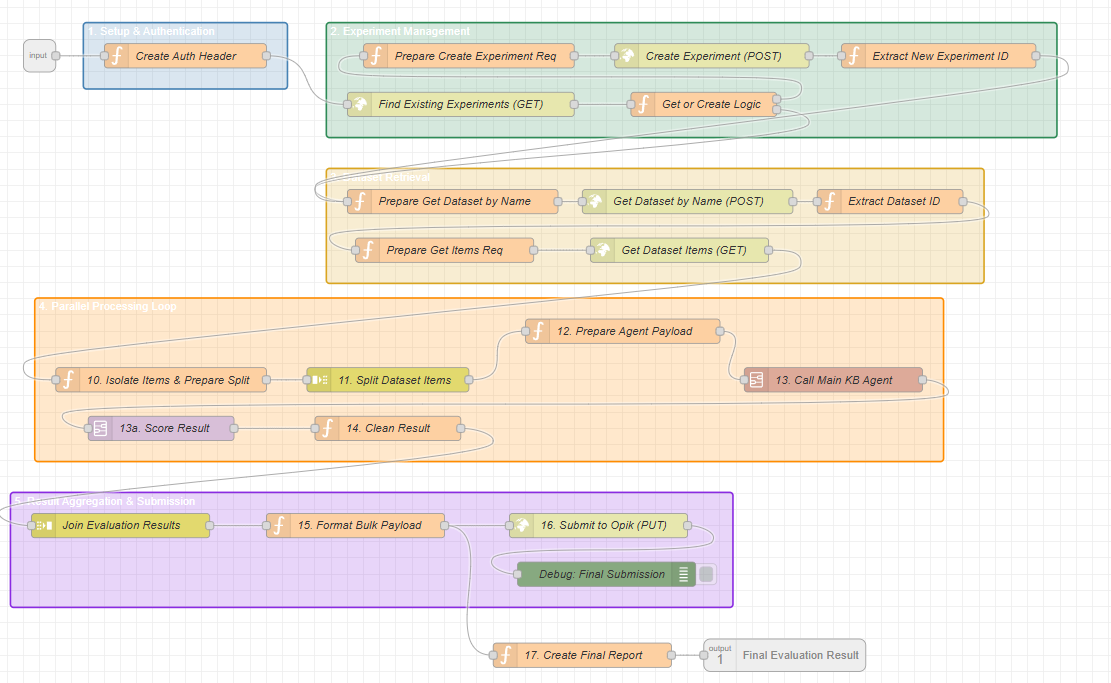

A. Initial Setup (Inside the Harness)

Authentication: The first step is to create a Basic authentication header using the opik_username and opik_password you configured in the global variables. This header will be used for all subsequent API calls to your Opik service.

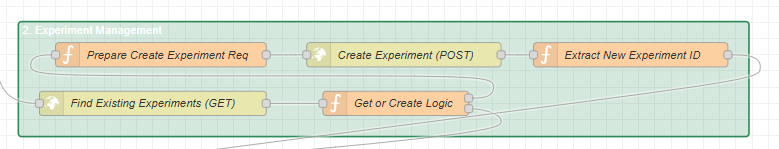
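A Basic authentication header is the word `Basic` followed by the Base64 encoding of `username:password`. The sketch below shows that construction in plain Node.js; `buildBasicAuthHeader` is an illustrative name, and the credentials are dummy values rather than anything from the flow.

```javascript
// Builds the value of an HTTP "Authorization" header for Basic authentication.
function buildBasicAuthHeader(username, password) {
  const token = Buffer.from(`${username}:${password}`, "utf8").toString("base64");
  return `Basic ${token}`;
}

const authHeader = buildBasicAuthHeader("user", "pass");
console.log(authHeader); // Basic dXNlcjpwYXNz

// The header would then accompany each request to the Opik service, e.g.
//   headers: { Authorization: authHeader }
```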

Experiment Management (Get or Create): The flow intelligently avoids creating duplicate experiments. It first performs a GET request to fetch all existing experiments in your Opik project.

If an experiment with the name you provided (opik_experiment_name) already exists, the flow retrieves its ID and proceeds.

If not, it performs a POST request to create a new experiment and then extracts the new experiment_id to use for the run.

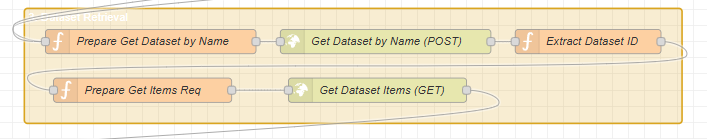
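The get-or-create logic can be sketched as follows. The `list` and `create` callbacks are hypothetical stand-ins for the GET and POST calls, and the `id` and `name` fields are assumed for illustration; the real Opik response shape may differ.

```javascript
// Returns the id of the experiment called `name`, creating it only if absent.
async function getOrCreateExperimentId(name, listExperiments, createExperiment) {
  const existing = (await listExperiments()).find((exp) => exp.name === name);
  if (existing) return existing.id; // reuse, so no duplicate is created
  const created = await createExperiment(name);
  return created.id;
}

// In-memory stand-in for the Opik service, for illustration only.
function makeFakeExperimentApi(initial) {
  const store = [...initial];
  return {
    store,
    list: async () => store,
    create: async (name) => {
      const created = { id: `exp-${store.length + 1}`, name };
      store.push(created);
      return created;
    },
  };
}

const fakeApi = makeFakeExperimentApi([{ id: "exp-1", name: "baseline" }]);
getOrCreateExperimentId("baseline", fakeApi.list, fakeApi.create)
  .then((id) => console.log(id)); // exp-1
```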

Dataset Retrieval: The flow uses the opik_dataset_name to fetch the specific dataset and all of its items (the individual questions and expected answers) from your Opik service.

B. The Core Evaluation Loop

This is where the actual evaluation takes place. The flow uses a parallel processing pattern so that dataset items do not have to wait on one another.

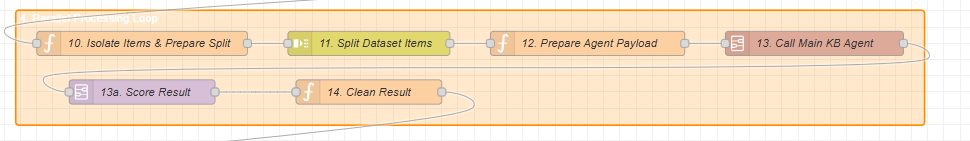

Split Items: The list of all dataset items is fed into a Split node. This node breaks the single large list into many individual messages, one for each item (i.e., for each question). This allows every question to be processed independently and in parallel.

For Each Individual Item, the following occurs:

i. The Agent Answers the Question: The input (the question) from the dataset item is sent to the "Main KB Agent" subflow. This agent is a sophisticated conversational AI. Its job is to:

- Understand the user's question.

- Decide if it needs more information.

- Use its search_knowledge_base tool to query your ChromaDB vector store.

- Formulate a final answer based on the retrieved knowledge.

The agent's generated answer is then passed to the next step.

Advanced: Using Your Own Agent

This evaluation harness is designed to be flexible. You can replace the default "Main KB Agent" subflow with your own custom-built agent to evaluate its performance.

However, if you do this, you must also adjust the "12. Prepare Agent Payload" node. This node's purpose is to transform the data from your Opik dataset item into the precise JSON structure that the agent subflow requires as its input. Be sure to modify this node to match the specific input requirements of your custom agent.
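As a rough illustration of that kind of transformation, the sketch below maps a dataset item onto an agent input. Everything about the shapes is an assumption: the item fields `id`, `input`, and `expected_output`, and the agent fields `question` and `sessionId`, are hypothetical and must be replaced with whatever your dataset and agent actually use.

```javascript
// Sketch of a "prepare payload" step. In a Function node, the body of this
// function would operate on the incoming `msg` and end with `return msg;`.
function prepareAgentPayload(msg) {
  const item = msg.payload;
  if (!item || typeof item.input !== "string") {
    throw new Error("Dataset item is missing a string 'input' field");
  }
  return {
    ...msg,
    datasetItem: item, // keep the original item for the scoring step
    payload: {
      question: item.input.trim(), // hypothetical agent field
      sessionId: `eval-${item.id}`, // hypothetical agent field
    },
  };
}

const prepared = prepareAgentPayload({
  payload: { id: "item-1", input: "  What is UBOS?  ", expected_output: "A platform." },
});
console.log(prepared.payload);
```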

ii. The Judge Scores the Answer: The result from the agent is then passed to the "Opik Scorer" subflow. This subflow acts as an impartial AI judge.

- It receives the original question and the agent's generated answer.

- It uses a specialized LLM prompt (e.g., with gpt-4o) to evaluate how relevant the agent's answer was to the question.

- It returns a numerical score (e.g., 0.85) and a text reason for its score. This feedback is formatted in the structure the Opik API expects.
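A judge model usually replies with text, so the score has to be parsed and validated before it is used. The sketch below assumes the judge is prompted to reply with JSON containing `score` and `reason`; that reply format, the metric name `answer_relevance`, and the output field names are all hypothetical rather than taken from the "Opik Scorer" subflow.

```javascript
// Turns a judge reply such as '{"score": 0.85, "reason": "..."}' into a
// normalized feedback object with the score clamped to the range [0, 1].
function parseJudgeReply(replyText) {
  const parsed = JSON.parse(replyText);
  const value = Number(parsed.score);
  if (!Number.isFinite(value)) {
    throw new Error("Judge reply does not contain a numeric score");
  }
  return {
    name: "answer_relevance", // hypothetical metric name
    value: Math.min(1, Math.max(0, value)),
    reason: String(parsed.reason ?? ""),
  };
}

const feedback = parseJudgeReply('{"score": 0.85, "reason": "Directly answers the question."}');
console.log(feedback.value, feedback.reason);
```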

C. Aggregation and Final Submission

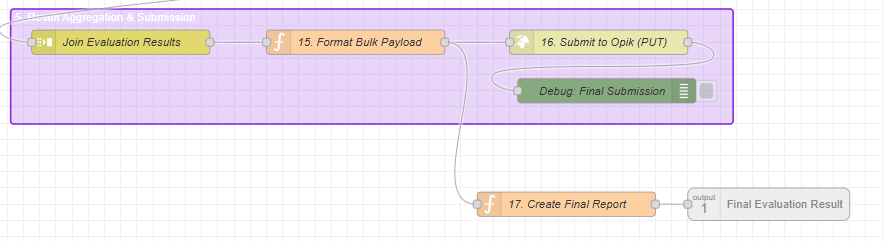

Join Results: After every single item has been individually answered by the agent and scored by the judge, a Join node collects all the separate results back into a single, large array.

Format Bulk Payload: This array of scored results is formatted into a final, large JSON payload. It prepares a single, efficient PUT request containing all the evaluation data for every item.

Submit to Opik: This final payload is sent to your Opik service in one bulk API call, populating the entire experiment with the detailed results, scores, and traces.

By processing items in parallel and submitting the results all at once, the flow is designed to be both fast and efficient, even with very large datasets.
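As a plain JavaScript analogy for the split, process, and join steps, the sketch below uses `Promise.all`. It is not the flow's implementation: `answerQuestion` and `scoreAnswer` are hypothetical stand-ins for the agent and scorer subflows, and the payload field names are assumed.

```javascript
// "Split" and per-item work: every item starts without waiting for the others.
// "Join": Promise.all resolves once all items finish, preserving input order.
async function evaluateAll(items, answerQuestion, scoreAnswer) {
  const pending = items.map(async (item) => {
    const answer = await answerQuestion(item.input);
    const score = await scoreAnswer(item.input, answer);
    return { dataset_item_id: item.id, output: answer, score };
  });
  return Promise.all(pending);
}

// Wraps all results into one object so they can be sent in a single request.
function buildBulkPayload(experimentId, results) {
  return { experiment_id: experimentId, items: results };
}

// Stubs for illustration: the first item deliberately takes longer.
const stubAgent = (question) =>
  new Promise((resolve) =>
    setTimeout(() => resolve(`answer to ${question}`), question === "q1" ? 30 : 5)
  );
const stubScorer = async () => ({ name: "answer_relevance", value: 1, reason: "stub" });

evaluateAll([{ id: "a", input: "q1" }, { id: "b", input: "q2" }], stubAgent, stubScorer)
  .then((results) => console.log(JSON.stringify(buildBulkPayload("exp-1", results))));
```

Even though the second item finishes first, the joined array keeps the original item order, which keeps each score attached to the right dataset item.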

Step 3: Review the Final Report

When the evaluation is finished, the "Retrieve Response & Format" node retrieves the original API connection using the runId and sends the final report back to the client.
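One common way to hold a connection open is to park the response object under a run ID and look it up again once the work is done. The sketch below illustrates that idea with a `Map`; the `PendingResponses` class is hypothetical, and how the actual flow stores the connection (for example in flow context) is not specified here.

```javascript
// Keeps response objects for in-flight runs, keyed by runId.
class PendingResponses {
  constructor() {
    this.byRunId = new Map();
  }

  // Called when the request arrives: park the response until the run ends.
  hold(runId, res) {
    this.byRunId.set(runId, res);
  }

  // Called when the run ends: hand back the response exactly once.
  release(runId) {
    const res = this.byRunId.get(runId);
    this.byRunId.delete(runId);
    return res; // undefined if the runId is unknown or already released
  }
}

const pendingResponses = new PendingResponses();
const fakeRes = { sent: null, send(body) { this.sent = body; } }; // stand-in response
pendingResponses.hold("run-123", fakeRes);

// ... later, when the evaluation finishes:
const heldRes = pendingResponses.release("run-123");
if (heldRes) heldRes.send({ status: "success" });
console.log(fakeRes.sent);
```

Releasing removes the entry, so a late or duplicate completion message cannot send a second response on the same connection.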

If the evaluation was successful, you will receive a 200 OK status and a JSON response containing a summary and detailed results of the experiment.

Example Success Response:

    {
      "status": "success",
      "message": "Evaluation completed successfully for experiment 'evaluation_from_test_button'.",
      "experiment_name": "evaluation_from_test_button",
      "dataset_used": "documents-qa-dataset-1753701963438",
      "items_evaluated": 10
    }

If an error occurred at any stage (e.g., the dataset name was not found), the "Catch Flow Errors" node will ensure you receive a clear error message with an appropriate status code (e.g., 400 or 500).
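The mapping from a caught error to a status code can be sketched as below. The error codes `INVALID_REQUEST` and `DATASET_NOT_FOUND` and the response body shape are hypothetical; the "Catch Flow Errors" node may classify errors differently.

```javascript
// Errors caused by the caller's input map to 400; everything else maps to 500.
const CLIENT_ERROR_CODES = new Set(["INVALID_REQUEST", "DATASET_NOT_FOUND"]); // hypothetical codes

function toErrorResponse(error) {
  const statusCode = CLIENT_ERROR_CODES.has(error.code) ? 400 : 500;
  return {
    statusCode,
    body: { status: "error", message: error.message },
  };
}

const notFound = Object.assign(new Error("Dataset 'missing-dataset' was not found"), {
  code: "DATASET_NOT_FOUND",
});
console.log(toErrorResponse(notFound));
console.log(toErrorResponse(new Error("Unexpected failure in scorer")));
```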

You can now navigate to the Experiments section in your Opik service to view the detailed, line-by-line results of your evaluation.